Technical

28 Dec 2021

Deep ML cannot be built in-house

Author

Ilia Zintchenko

Co-founder and CTO

Over the last few years, we have heard many arguments that ML should be built in-house: from investors, customers, books and even candidates we have interviewed. However, the arguments for this opinion are often exactly the same as the arguments for building software in-house. Although developing software in-house takes more time and costs more, the gain in flexibility and performance over off-the-shelf, general-purpose alternatives is often worth the price.

Many of those same arguments also apply to machine-learning models in cases where the cost of mistakes is small, the proportion of outliers is negligible and a human in the loop handles the cases where the model is not reliable. In this regime, models can reach sufficient accuracy using only in-house data and can be deployed by a couple of data scientists wielding off-the-shelf tools. The main goal here is nearly always to reduce costs by automating simple processes or flagging the cases that most need human attention. A classic example is customer support, where a model can address the most common inquiries and defer the rest to human agents.
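As a rough illustration of this human-in-the-loop setup, the sketch below routes each inquiry either to an automatic answer or to a human agent based on the model's confidence. The `Prediction` type, the `route` function, the labels and the 0.9 threshold are all hypothetical choices made for this example, not part of any specific product.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    label: str         # predicted inquiry category (hypothetical labels)
    confidence: float  # model's score for that label, in [0, 1]


def route(prediction: Prediction, threshold: float = 0.9) -> str:
    """Answer automatically only when the model is confident enough."""
    return "auto" if prediction.confidence >= threshold else "human"


def automation_rate(predictions: list[Prediction], threshold: float = 0.9) -> float:
    """Fraction of inquiries handled without a human agent."""
    if not predictions:
        return 0.0
    handled = sum(route(p, threshold) == "auto" for p in predictions)
    return handled / len(predictions)


# Made-up example scores, for illustration only.
batch = [
    Prediction("reset_password", 0.98),
    Prediction("refund_status", 0.95),
    Prediction("billing_dispute", 0.55),
    Prediction("unknown", 0.20),
]
routes = [route(p) for p in batch]
```

In this toy batch, the two common, high-confidence inquiries are answered automatically and the other two are deferred to a person. Raising the threshold trades automation for fewer unattended mistakes.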

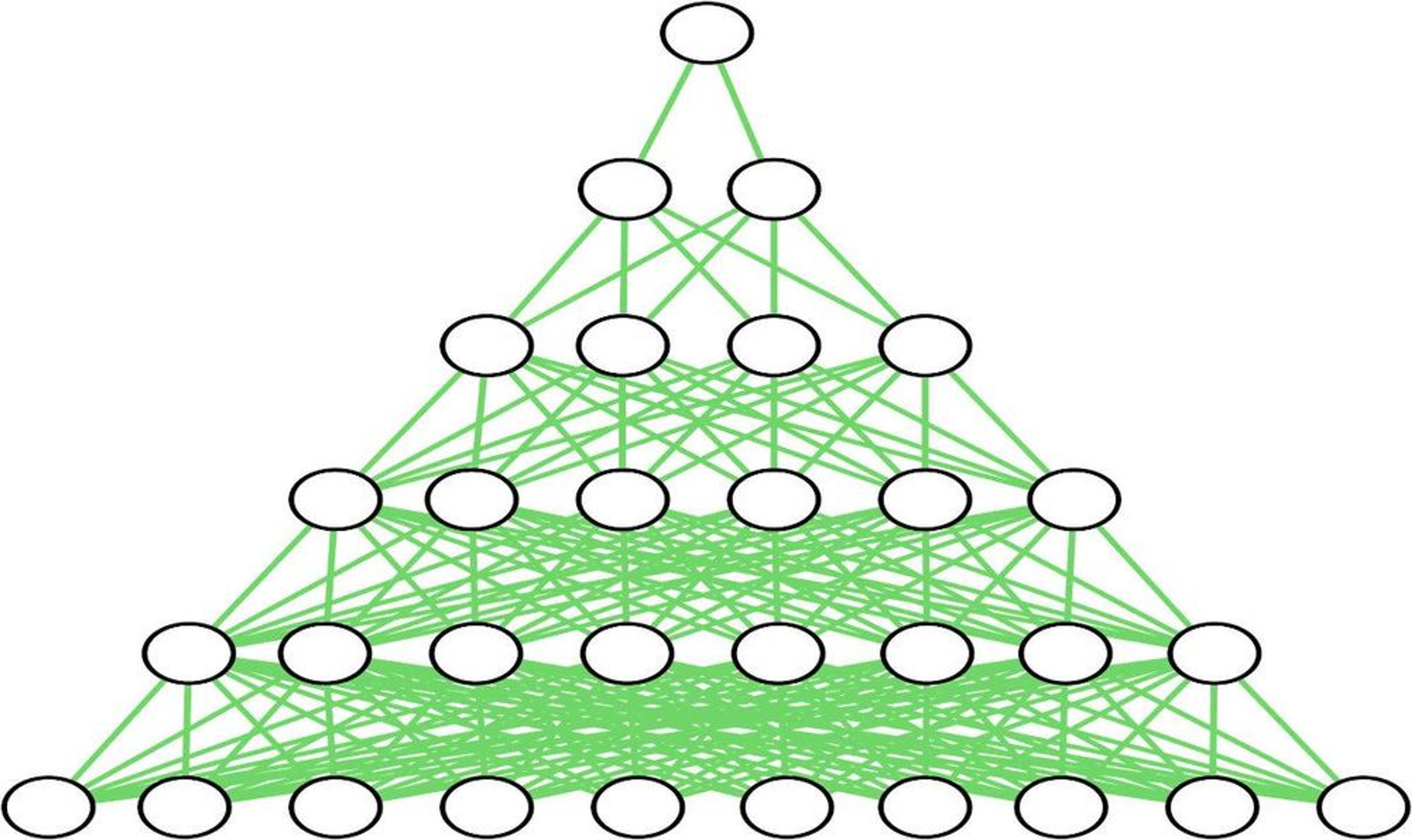

To go beyond cost-cutting and into the domain of full autonomy, a model needs to be reliable in situations that are far from the typical ones it was trained on. As we outlined in a previous post, in this regime in-house data is not sufficient, and data from multiple distributions across a network of organizations is required. The performance gain from accessing such external data can outweigh any counterparty risk or competitive sensitivity that comes with sharing it.

The problem of understanding financial transactions sits deep in this regime, where in-house data is insufficient. As we described previously, programmatically making decisions driven by insights hidden in transaction data can unlock applications that have never before been possible. However, the cost of each mistake far outweighs the gain from each correct decision. In the US alone, more than 10k companies are established, and many thousands shut down, every day. New transaction patterns appear and disappear on a regular basis. The customer base of businesses is becoming increasingly global. Reliably processing transactions across a customer base that is growing in both size and diversity requires a richer stream of data than any single fintech or even traditional bank has in-house. In this regime, the generality and robustness of a model are critical, and building in-house is an uphill battle that will at best result in a brittle model that fails to handle the continuous changes in transaction patterns and merchant types.
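To make the asymmetry between mistakes and correct decisions concrete, here is a minimal sketch under a simplifying assumption: every correct decision earns a fixed gain and every mistake incurs a fixed cost. The function names and the 50x ratio are hypothetical and chosen only for illustration.

```python
def expected_value(accuracy: float, gain: float, cost: float) -> float:
    """Expected payoff per automated decision."""
    return accuracy * gain - (1.0 - accuracy) * cost


def break_even_accuracy(gain: float, cost: float) -> float:
    """Accuracy at which the expected payoff is exactly zero."""
    return cost / (gain + cost)


# Hypothetical figures: a mistake costs 50x what a correct decision earns.
threshold = break_even_accuracy(gain=1.0, cost=50.0)  # about 0.98

# A model that is 95% accurate loses money on average in this setting,
# while a 99% accurate one is profitable.
loses = expected_value(0.95, gain=1.0, cost=50.0) < 0
wins = expected_value(0.99, gain=1.0, cost=50.0) > 0
```

Under these assumed numbers, a model has to be right on roughly 98% of decisions just to break even, which is why accuracy on rare and unfamiliar cases matters so much in this regime.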

At Ntropy, we have models that train in real time on transactions from hundreds of businesses, across a range of countries and languages in North and South America, Africa, Australia and Europe. For many applications, our APIs are already outperforming anything available on the market, and we’re just getting started. Our goal is to solve transaction intelligence globally and enable a new generation of companies to build products and services powered by this data, ranging from automated revenue-based financing to hyper-accurate recommendations. Learn more about what you can build with Ntropy here and start exploring our API here. Happy holidays!